Developers’ point: Celonis

developers pointAn interview with Martin Klenk

Martin Klenk is Chief Technology Officer and co-founder of Celonis, a leading company in process mining and execution management technology. Martin and the team grew the company born in 2011 out of a university project in Munich for five years without external funding. Five years later, Celonis has now earned the decacorn status. We talk about the history, evolution and foreseen future of Celonis and process analytics with Martin in an interview in company with Jerome Geyer-Klingeberg (Head of the Celonis Academic Alliance).

Martin, how did it all start?

Celonis started with Basti, Alex and me [Alexander Rinke, Martin Klenk, Bastian Nominacher, from left to right in the photo below]. We began with a project that motivated us to found a company. It was 2010. Back then, the challenge was to get a grip of the IT service management processes. We decided to adopt process mining. Research work and publications were available, but existing tools did not really scale and could not cope with the volume of data with which we were confronted. We were combining usual analytics and regular data processing capabilities with the structural perspective of processes. We built the initial toolchain by combining process-oriented and data-analysis steps. That was basically the first prototype of what would have come after. Since then, the journey continued quite interestingly.

Celonis started with Basti, Alex and me [Alexander Rinke, Martin Klenk, Bastian Nominacher, from left to right in the photo below]. We began with a project that motivated us to found a company. It was 2010. Back then, the challenge was to get a grip of the IT service management processes. We decided to adopt process mining. Research work and publications were available, but existing tools did not really scale and could not cope with the volume of data with which we were confronted. We were combining usual analytics and regular data processing capabilities with the structural perspective of processes. We built the initial toolchain by combining process-oriented and data-analysis steps. That was basically the first prototype of what would have come after. Since then, the journey continued quite interestingly.

In the beginning, we were mainly focussing on IT service management — we saw it was a largely process-driven sector! Soon after, we managed to put a foothold in commercial and administrative processes, and so forth. We quickly realised that business runs if processes work well. The wave of digitisation, after all, was already starting in 2010, as more and more information was being moved from paper to digital stores. Data was there but the software to get ahold of it was still missing. Starting from that, the company, the business, and even the geographical distribution grew. Nowadays, we are about 2000 people, 500 of them in the engineering area. At present we have more than 2000 enterprise customer deployments, many of which with long-term customers and well-established large companies, from Siemens to Uber.

In 2016, very early in the process, we began building teams in the US and established a significant presence there. As in the rest of the world: at the moment, for example, we are cooperating with organisations in Japan and India!

The very first version was perhaps usable only by Alex and me. Since then, our product has followed two journeys. On the one hand, we wanted it to have more analytic capabilities, to be able to process larger amounts of data, and (more recently) handle multiple event logs and data sources – not just one table having events associated with a single case identifier but multiple objects and event tables attached to them with dedicated algorithms looking into interleaving dependencies, that is, highlighting how different processes influence each other.

We recently added the capability to handle networks of processes: we add a higher layer with a more general graph that coordinates the rest, thus allowing for bottom-up or top-down analyses at different levels of abstraction. Also, we handle multi-staged processes, which are typical for supply chains, for example. Think of a sequence of production steps: from raw materials, say steel powder, the first step ends with the production of a block of steel; then, the next step is separating the block into smaller steel bladders; next, these elements become car components. With a traditional mining approach, the dependencies could rapidly create a mess in the process graph or be disregarded – which is undesirable as customers want to understand the links among different cases. I personally think it is of high relevance because if something gets delayed or does not perform as expected, it is typically not due to a single step in particular. If you look at it in isolation, it may be working well. For example, if during the sourcing stage the cargo arrives later than expected, it may be still fine: the materials enter the warehouse and become available for later use. However, it might be that those materials were needed in the production phase, subject to a tight schedule. This entails that the second-stage process accumulates delay. The domino effects proceed with increasingly heavier effects on the processes that follow along the line. We have been largely focussing on this topic lately.

Not only are we working to make sure the platform deals with the complexity, interconnection and data volumes the users are facing with their processes, but we also want to make it usable for diverse end-user groups – from IT specialists to process analysts, from business people to domain experts… all of them should be catered for by our system to interact with our system. Business users, for instance, may not be the ones who are into programming but they are definitely the most valuable resource when it comes to interpreting the results or providing insights into the actual execution of processes. Nowadays, not only people but also systems are getting involved as stakeholders in general – let it be triggers for execution or input for automation.

Let’s have a leap back in time. You and the team of founders did not resort to a mesh-up of existing solutions for your first project but rather preferred to create your own one. What was the motivating factor that led you to prefer this path?

We did not really know how to address the problem initially. However, we were pretty experienced in software development and IT. That led us somehow to a more analytical and data-driven approach. Back in the time, we were still studying. Our motivation was driven by curiosity and a strong will to learn along the way. We were working with CIOs and thus had the chance to pick up quite a lot of information. We were ten years younger (I was 24), so there was not much that held us back. We wanted to make an impact with our ideas. It came natural to start. We all knew that if it had not worked out, there would have been another way to get another job or another opportunity after all. Our expectations on living and working standards were quite low too, after all. Our first office was Basti’s apartment – a one-bedroom apartment, to be specific. Back then, it was fine for us to sit together in a small room in front of a computer. The motivation and enthusiasm was too high to opt for alternatives.

What is Celonis today, and what were some key moments in its evolution?

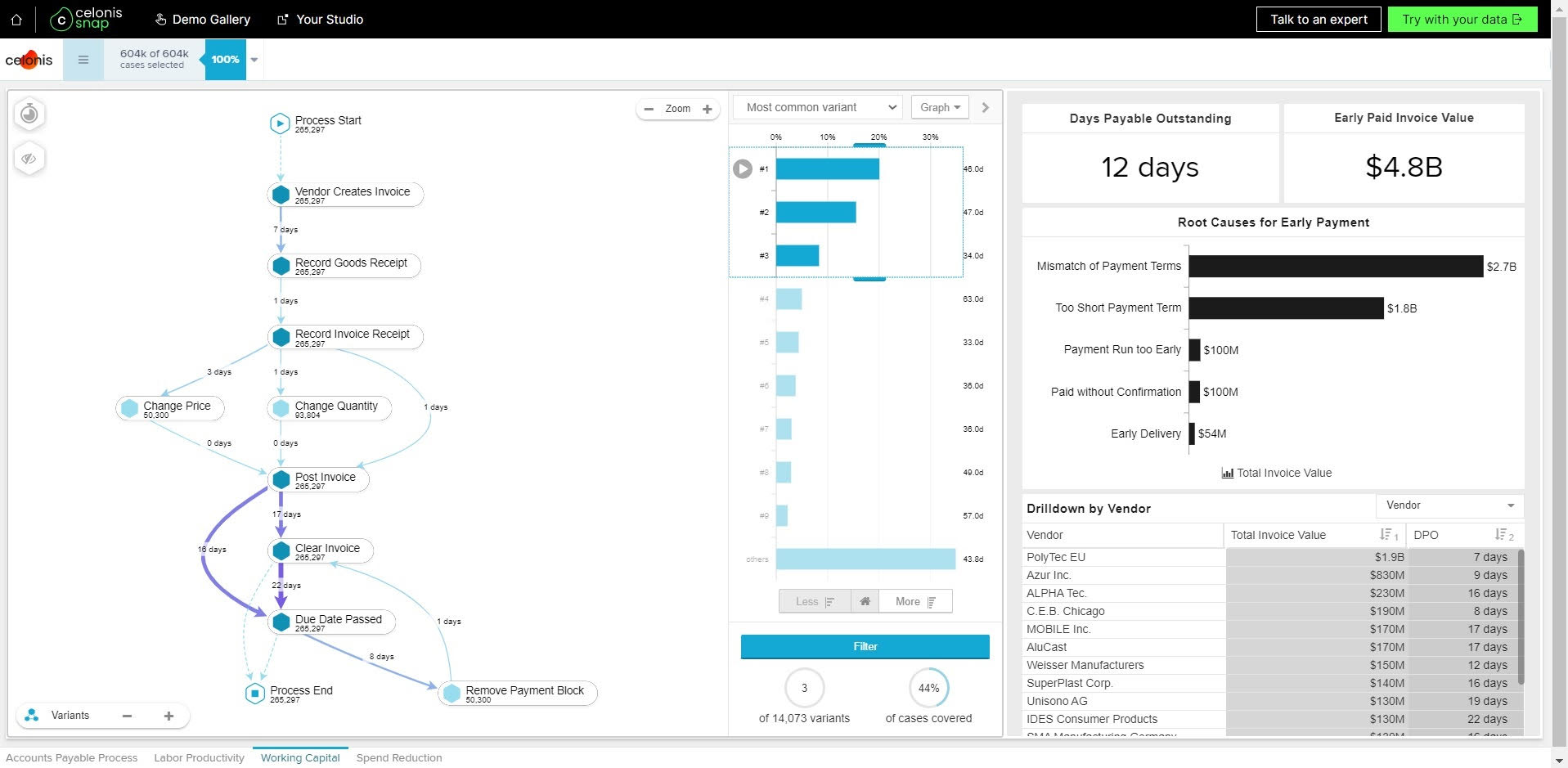

I think every generation was a step forward toward a new generation. At the beginning, the product was like an X-ray machine for diagnosing processes and their inefficiencies: you could understand what was happening in your organisation to a certain degree. That has been the value proposition for a long time. You can understand certain metrics and make them accessible even if you are not data-minded or IT-savvy.

The next pivotal moment was when we re-platformed the whole product and redesigned a large part of the infrastructure when we moved to the cloud. Since then, a very large part of the focus has been on integrating the platform with ecosystems, automation and execution of processes. This is actually the category we are on at the moment: moving from pure diagnosis, generating rich insights, to adding tools and capabilities that help you use those insights, let it be to fix problems, guide system reconfiguration, support IT transformation projects, and so on. If you work in the process mining industry, the typical outcome you get is the X-ray radiograph. Creating value out of this is the immediately following challenge.

Another perspective to that is that we have grown the product with the customers. If we had had no customer demand to guide our design and implementation, we would have probably built the wrong product. For instance, one of the first customers was Siemens. With that project we experienced quite a challenging environment, in terms of data volume and the organisation’s dimension. Challenging but opening up for a whole new range of customers we would not have been able to address.

In 2020, we added (at the request of many of our customers) therapies to the diagnosis of our X-ray machine: In October last year, we launched our Execution Management System. It provides a 360-degree view of all business processes, weaves all data insights together, and pushes actions back out into relevant systems. It lets companies connect to any underlying business systems, leverage a complete set of process improvement tools, including process mining automation, benchmark against industry standards, and identify execution gaps and inefficiencies to maximise business capacity. In short, it helps organisations to execute on their data.

In October 2021, Celonis launched the Celonis Execution Graph. It features an industry-first capability to connect events from different processes and identify friction points to give businesses a 360-degree view of their processes. Business processes are interconnected and interdependent, after all. Having a full picture of all these processes and of how they influence each other is the next step forward for execution management.

Where do you see Celonis and Process Mining in 5 years?

You never know! If you look at the industry as a whole, the execution management discipline is emerging. The idea is, rather than wiring up systems and creating reports on top of that, have one system to control and manage processes. This is going to remain the North Star in the coming years at Celonis: how can we make the data coverage bigger and extract more knowledge, how can we make it easier to adopt the system and layer more knowledge on top of that we are getting, how can we make the execution and integration with systems around easier? In a few words: what do we need to do to perfect the stack in all its levels?

Also, at a more tactical level, we observe that Celonis does not exist in isolation. We need to be able to effectively and efficiently connect with all parties. We are part of an ecosystem of companies, IT landscapes, and academia of course. By the way, we are happy to offer a free Celonis version for Academics. Let me also add that the research trend in Object-Centric Process Mining is also influencing our work.

Some trends we observed and especially became crucial with the Corona crisis is how industries are reorganising their supply chains. Companies want to assess how sustainable they are too. We are investigating how the priorities of the world are changing and how we can contribute to that. We have to be adaptive as a company and keep on understanding what the customers want to achieve. From a technological standpoint, as the integration and tool usage is moving forward, and the use cases as well, we need to add the right amount of depth and usability to the tool to still deliver value to the customers as time progresses.

By the way, if you had asked me this question 5 years ago, I don’t think I’d have been able to predict where we stand today accurately — it’s half of the company’s life, after all! [The photo above dates back to five years ago]

If you could characterise your tool in one sentence (a) without superlative adjectives (e.g., “best”, “fastest”, “most accurate”) and (b) not mentioning competitors, this sentence would be…?

This is a tough one! I would say: Celonis generates process mining insights at scale and brings these insights back to where they matter the most.

- Academic stories: Dirk Fahland

- Developers’ point: Celonis

- End-user’s corner: Aziz Yarahmadi

- ICPM 2021: Some reflections

- The XES 2.0 workshop

- Updates from the Steering Committee

- Process Mining Summer School 2022

- New course “Process Mining: From Theory to Execution”

- Questionnaire: the Process Change Exploration tool

- This article has been updated on December 17 2021, 13:47.

- An interview with Martin Klenk