10 years of XES: The story so far

Talking with Wil van der Aalst, Joos Buijs, Boudewijn van Dongen, Christian W. Günther and Eric Verbeek

About ten years ago, the release of a new XML-based, machine-readable format to represent and store event logs was announced: the eXtensible Event Stream (XES). XES would soon become the archetype of process mining input and then an official IEEE standard. You can find useful resources and further reading on XES in the dedicated website section of the IEEE Task Force on Process Mining. Next, we talk about its origins, use, and foreseen enhancements with the Knights of the Quest: Wil van der Aalst (in the following, WvdA), Christian W. Günther (CWG), Joos Buijs (JB), Eric Verbeek (EV) and Boudewijn van Dongen (BvD).

First things first: what led you to embark on the adventure of creating a new standard for event logs?

WvdA: XES (eXtensible Event Stream) can be seen as a successor of MXML (Mining eXtensible Markup Language), the first standard to exchange event logs. MXML was created in 2003 and was one of the main cornerstones of the first ProM version. We developed several extractors for different systems ranging from ERP systems like SAP and PeopleSoft to workflow management systems like Staffware and FLOWer. In the context of the SUPER FP6 project, we also developed SA-MXML (Semantically Annotated Mining eXtensible Markup Language), a semantically annotated version of the MXML format. The development of SA-MXML showed the limitations of MXML, because more sophisticated process mining techniques had to interpret existing XML elements in application-specific ways. These findings coincided with the establishment of the IEEE Task Force on Process Mining in October 2009 and triggered the development of the XES standard. In September 2010, the format was adopted by the IEEE Task Force on Process Mining. After a long standardization process in the IEEE, XES became an official IEEE standard in 2016.


CWG: When I started my PhD back in 2004, one of the first things Wil had me do was to implement a tool for converting log data to MXML, which eventually became ProMimport. At that time, ProM was a Zip file on Boudewijn's hard drive, which he would email to you if you asked nicely. I think there were no more than a dozen or so people actively using it back then, and there were just a handful of sample logs in MXML that basically everyone was using for everything.

Both ProM, as a framework for sharing development efforts between different process mining projects, and MXML as an interchange format, were arguably designed with a very early and incomplete understanding of what process mining could, and eventually would, become. However, these design decisions proved to be hugely beneficial for the growth and development of the field.

Once we made ProM and ProMimport freely available under an open-source license, and there was more and more data available in MXML format (collected from various experiments, projects, and case studies), very soon a very active ecosystem started springing up. There was something like an exponential growth of the field, and every year there were more papers, more projects, more researchers, and more universities all over the globe involved in process mining.

With every new process mining algorithm in development, and with every additional huge log file that had been converted to MXML, slowly but surely the cracks started to show. Both ProM, as a framework, as well as the MXML log format, looked like they were due for an update.

In the end, we set out to completely redesign and rewrite ProM from scratch as ProM 6, which was a huge undertaking involving most of the community back then. The XES format was initially designed as the corresponding upgrade for MXML, with the OpenXES reference implementation serving as the storage layer for ProM 6.

Can you tell us a “Eureka!” moment during that adventure?

JB: The XES format was under development just as I started my MSc thesis on a tool to support data extraction/conversion to XES (the tool was named XESame, of course an invention of Eric Verbeek). While brainstorming about the features and structure of XESame, I came to the realization (of course obvious in hindsight) that the log-trace-event structure is the backbone of the XES format, and process mining in general. This structure is what distinguishes process mining from data mining. Only a very minimal set of attributes is actually required for most generic process mining techniques: a sorted list of named events per trace is the only real requirement for process discovery, for instance. Even timestamps are optional but, on the other hand, required if you want to discover bottlenecks and such. Therefore, for me, the key feature is indeed the X part: extensions. This makes it very easy to endow new notions with meaning. XES luckily comes with 5 standard extensions to cover the basics and get started quickly.
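To illustrate how little input generic discovery needs, here is a minimal sketch that derives directly-follows counts from nothing more than ordered activity names per trace. The traces and activity names are invented for illustration, and the directly-follows relation is just one common starting point for discovery, not a technique named in this interview.

```python
from collections import Counter

# Hypothetical log: each trace is only an ordered list of activity names.
traces = [
    ["register", "check", "decide", "pay"],
    ["register", "decide", "reject"],
    ["register", "check", "decide", "reject"],
]


def directly_follows(traces):
    """Count how often activity a is immediately followed by activity b."""
    counts = Counter()
    for trace in traces:
        # zip pairs every event with its successor within the same trace.
        counts.update(zip(trace, trace[1:]))
    return counts


dfg = directly_follows(traces)
for (a, b), n in sorted(dfg.items()):
    print(f"{a} -> {b}: {n}")
```

No timestamps are involved here; they would only become necessary for questions such as where the bottlenecks are.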

During my MSc project, it was also interesting to see the changes the standard underwent in the first months, mainly in the area of the extensions. Extension prefixes, extension definitions, and the 5 standard extensions were heavily under development then. For me as an MSc student, the implemented XES standard and the first OpenXES implementation were also very handy and necessary requirements to have. This allowed me to build a tool that was able to actually run and process data, without requiring a specific implementation. During my PhD, different, more efficient implementations were developed, but these could easily be integrated into XESame and ProM because they adhered to the standard. The implementation aspect is therefore also extensible.

CWG: I don’t remember a particular moment of enlightenment. Most of the changes from MXML were relatively straightforward, like the introduction of strongly typed attributes. It was an exciting and nerve-wracking time though, with the whole ground of the ProM framework shifting below our feet in a constant state of redesign. We could finally address all those little problems and pet peeves that had annoyed us over the years.

For one, I think that adding global attribute definitions and event classifiers was a pretty neat idea. When you are writing an event log, especially to a bespoke format like XES, you tend to have a pretty good idea of what attributes you are going to include with every trace and every event in this data set. If you declare these at the top, this can make a profound difference when reading an unknown file. Your analysis algorithms can now tell the ubiquitous attributes from the optional ones. Also, if you are going to store that data, say in a database, you now know how to structure your tables.

Event classifiers, on the other hand, give you a means to communicate to the consumer of the log what you think a good way of looking at the data could be (e.g., which attributes would make for good activity names). At the same time, you can include multiple classifiers with one set of data, so you can provide as many views on the same data set as you like, all in one compact file. Of course, anyone is free to pick a completely different view, and add that as yet another classifier on top.

The concept of extensions is ultimately what makes XES transcend a more rigid format like MXML. Whenever there is a new set of data attributes, which a group of users or algorithms can agree on how to interpret, you can introduce an XES extension defining them. Nobody stops you from declaring your own extension, and you can even have proprietary extensions just for your own use. It is certainly dangerous, in the sense that it can be overused. On the plus side, it does also give you sort of an emergency release valve, where the standard itself does not need to accommodate each and every pet use case that may arise in the future.

What are the main characteristics of XES? How should XES files be used?

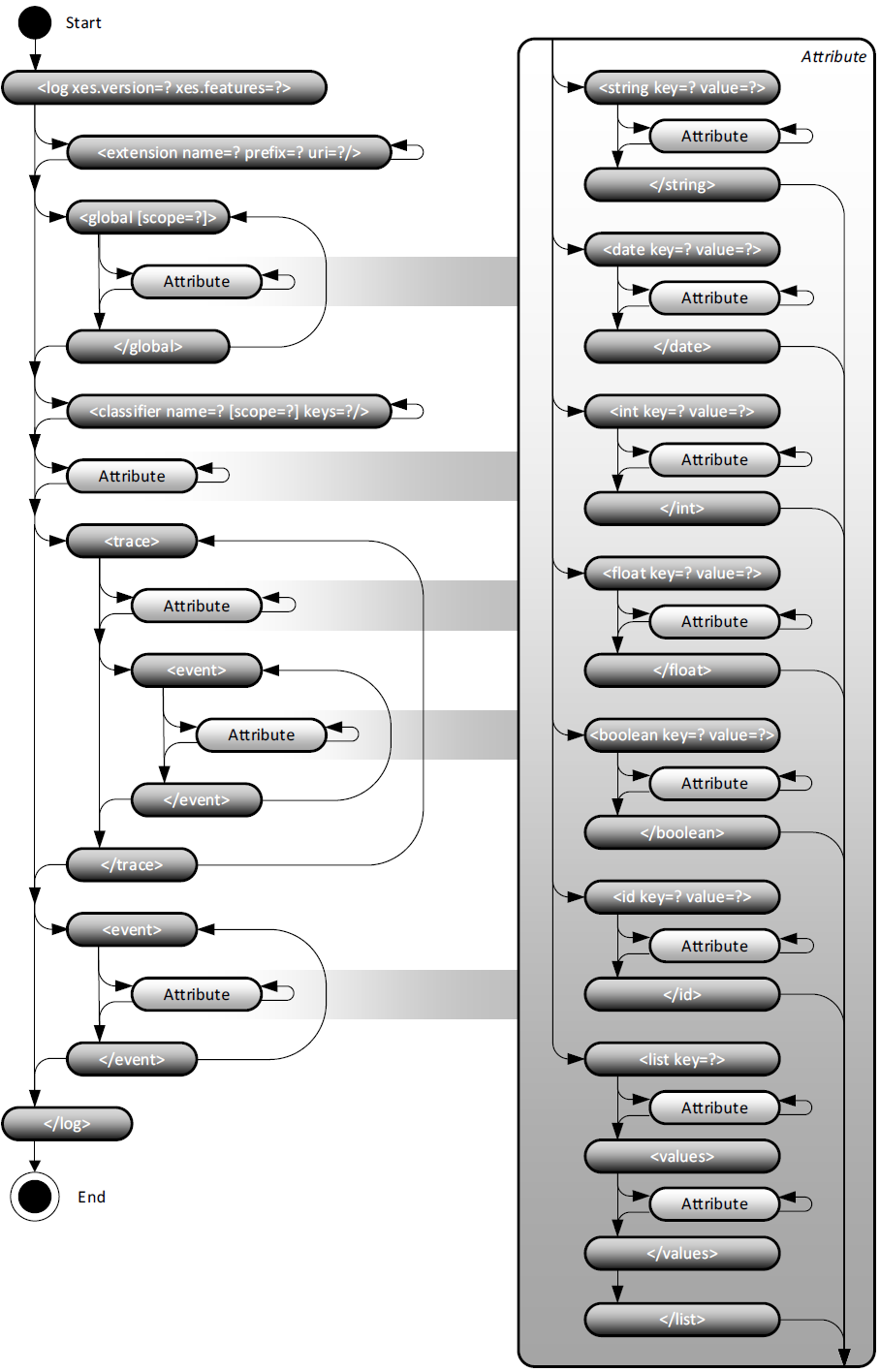

EV: An XES file contains a log, which consists of events which are possibly grouped by traces. The order in which the events occur in the log or in a trace is important, as the assumption is that this order is the same as the order in which events occurred in real life. Data is added to an event, a trace, or the log by adding attributes to it. An attribute is a typed key-value pair, where the type can be a text, an integer number, a real number, a date and time, a boolean, an identifier, or a list of attributes. Attributes themselves can also have data.

To provide semantics to the data, extensions are used. Every extension defines a number of keys and provides a meaning to the values associated with such a key. As an example, the “concept:name” key is defined by the concept extension on logs, traces, and events as being the name of the log, trace, or event. A collection of standard extensions is available, which includes the concept extension. Nevertheless, this collection of standard extensions is not fixed, and new extensions can be added to it.
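The log, trace, event, and typed-attribute structure can be sketched with a small hand-written document and Python's standard XML parser. This is a simplified illustration, not a full XES reader: the sample omits the XML namespace that real files may declare, nested and list attributes are ignored, and the `amount` and `approved` keys are invented.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Hand-written sample for illustration; no XML namespace is declared here.
XES_SAMPLE = """<log xes.version="1.0">
  <extension name="Concept" prefix="concept" uri="http://www.xes-standard.org/concept.xesext"/>
  <extension name="Time" prefix="time" uri="http://www.xes-standard.org/time.xesext"/>
  <trace>
    <string key="concept:name" value="case-1"/>
    <event>
      <string key="concept:name" value="register request"/>
      <date key="time:timestamp" value="2020-12-01T09:00:00+01:00"/>
      <int key="amount" value="250"/>
    </event>
    <event>
      <string key="concept:name" value="decide"/>
      <date key="time:timestamp" value="2020-12-01T11:30:00+01:00"/>
      <boolean key="approved" value="true"/>
    </event>
  </trace>
</log>"""

# The element name carries the attribute type; map each type to a converter.
CONVERTERS = {
    "string": str,
    "int": int,
    "float": float,
    "boolean": lambda value: value == "true",
    "date": datetime.fromisoformat,
    "id": str,
}


def read_attributes(element):
    """Collect the typed key-value pairs that are direct children of element."""
    attributes = {}
    for child in element:
        convert = CONVERTERS.get(child.tag)
        if convert is not None and "key" in child.attrib:
            attributes[child.get("key")] = convert(child.get("value"))
    return attributes


def read_log(xml_text):
    """Return a list of traces, each with its attributes and ordered events."""
    root = ET.fromstring(xml_text)
    return [
        {
            "attributes": read_attributes(trace),
            "events": [read_attributes(event) for event in trace.findall("event")],
        }
        for trace in root.findall("trace")
    ]


log = read_log(XES_SAMPLE)
print(log[0]["attributes"]["concept:name"], len(log[0]["events"]))
```

The `concept:name` key appears on both the trace and its events, and in each place the concept extension gives it the meaning "name of this element".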

Finally, the log also contains some meta-information on the log itself, like which extensions are used in the log, which attributes are carried by every event (or by every trace), and which classifiers there are. Classifiers are an important concept in XES, as a classifier can be used to provide the activity name associated with the event. Although the “concept:name” attribute provides you with the name of the event, the activity name may be something different. A classifier corresponds to a list of attribute keys such that the concatenated values of the corresponding attributes provide you with the activity name.
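The classifier idea can be sketched in a few lines: a classifier is a list of attribute keys, and the activity name is the concatenation of the corresponding values. The classifier names and the `+` separator below are assumptions for illustration; the attribute keys come from the standard concept, lifecycle, and organizational extensions.

```python
def classify(event, keys, separator="+"):
    """Concatenate the values of the classifier's keys into one activity name."""
    return separator.join(str(event[key]) for key in keys)


# One event, represented here as a plain dictionary of attributes.
event = {
    "concept:name": "decide",
    "lifecycle:transition": "complete",
    "org:resource": "Sara",
}

# Several classifiers can be shipped with the same log, one per view.
classifiers = {
    "Activity": ["concept:name"],
    "Activity and lifecycle": ["concept:name", "lifecycle:transition"],
    "Resource": ["org:resource"],
}

views = {name: classify(event, keys) for name, keys in classifiers.items()}
for name, label in views.items():
    print(f"{name}: {label}")
```

Each classifier yields a different view on the same event, which is why one log can serve several analyses without being rewritten.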

Earlier, I mentioned that the events in the log are possibly grouped by traces. In the first (non-IEEE) XES standard, it was mandatory that events were grouped by traces, but in the IEEE XES standard, traces are optional. As a result, it is possible to have a log that contains only events. This enables the standard to be used for event streams as well. To be able to tell to which case an event belongs, a trace classifier is used, which is a classifier that provides you with the case id for a given event.
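For a log without traces, a trace classifier can rebuild the grouping on the consumer side. The sketch below assumes events arrive as dictionaries in the order they occurred and uses a hypothetical `order_id` attribute as the case identifier; neither the key nor the separator is prescribed by the text above.

```python
from collections import defaultdict

# Hypothetical flat event stream, already in order of occurrence.
stream = [
    {"order_id": "A", "concept:name": "register"},
    {"order_id": "B", "concept:name": "register"},
    {"order_id": "A", "concept:name": "decide"},
    {"order_id": "B", "concept:name": "cancel"},
    {"order_id": "A", "concept:name": "pay"},
]


def group_by_case(events, case_keys, separator="+"):
    """Assign every event to a case using the keys of a trace classifier."""
    traces = defaultdict(list)
    for event in events:
        case_id = separator.join(str(event[key]) for key in case_keys)
        # Appending preserves the original order of events within each case.
        traces[case_id].append(event["concept:name"])
    return dict(traces)


cases = group_by_case(stream, ["order_id"])
print(cases)
```

Because events are appended as they are read, the same function works on a stream that is still growing.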

CWG: The strength of a standardized format like XES is in the interchange of data between different tools and vendors. In particular, whenever you want to transfer event log data from one tool to another, or between individuals or groups, you should use XES for that. More generally though, that interchange can also be seen as being across concrete implementations, space, and time. So, if I want to read an XES file that was written by a complete stranger, ten years ago, using a Perl script, it shouldn't matter that I am using a completely different set of tools and background, and that I know nothing of the above.

As a rule of thumb, when writing XES data, you want to include as much information as you can, while sticking to the most specific and standardized interpretations possible. Your file should be easy to interpret, without limiting the general usefulness. Wherever you can use a standard extension attribute for a piece of data, you should do so. And for any view that you find useful, include a classifier for that.

In general, I think it is not as important how you use XES, but that you are using it in the first place. A standardized log format allows you to use the same data set with many different analysis tools, and move seamlessly between them. Every process mining tool has a different profile of strengths and weaknesses, and different use cases. There is also a growing ecosystem of open-source tools, and it is increasingly common for advanced users to fill in the gaps with ad hoc solutions and scripts.

It is one of the strengths of process mining that you do not have to pick one specific tool or platform to do your work, but that you can creatively integrate a patchwork of solutions, playing to their respective strengths. This openness is driving progress, allowing for solutions with a tight focus, and last but not least it drives the collaboration between different parties in industry and academia. Having the XES standard, and having broad support for it in our tool landscape, has been a major factor in that. If your process mining tool does not provide full (and, most of all, fully functional) support for XES, you should work on changing that.

A large collection of XES files is stored by the 4TU.ResearchData community. How does it work?

EV: The 4TU centre for research data is an initiative of originally three, but now four universities in The Netherlands. They realized that libraries exist to store books and articles forever, but no such storage was available for research data. Instead, most researchers stored data on their private facilities and the sharing of data was not possible or discouraged.

The 4TU centre for research data tries to change this. By publishing data with a digital object identifier, this data remains available to researchers worldwide, forever. The cost of this library for data is shared by the four universities involved. Every researcher worldwide can store up to 10 GB of data free of charge and the upload process is rather simple. Your data gets a digital object identifier and you can be sure that it will still be available 15 years from today. The task force on process mining has its own collection which can be used for storing data!

BvD: Uploading data is an easy process and you can find a step-by-step tutorial on the data centre’s website. It’s important to realize that the uploader is listed as an author of the data, just like a paper. Hence if the data is properly cited, you will be recognized for contributing to the collection.

The latter is also the biggest challenge of the data centre. We, as a community, tend to cite datasets in footnotes or by simply mentioning them in the text instead of using proper citations. We’re already doing much better than other communities though, which is why our datasets are consistently ranked as most downloaded in the annual reports!

JB: During my PhD, I managed to convince some of the PhD projects’ municipalities to publish their data. This led to a nice real dataset of 5 comparable, but not identical, event logs. This data set was key for my PhD work on comparing event logs and discovering configurable process models. However, I felt that this unique dataset would be too valuable to keep private. I’m therefore grateful that so many organizations share their real data sets; this is a key contribution to making process mining research applicable to realistic datasets. For me, the datasets provided enough challenges anyway!

How do you imagine the future of XES? What can we expect from round the corner?

WvdA: I see two significant developments. First of all, we need to focus not only on process mining tools but also target systems that can produce events. Thus far, XES was mostly adopted by vendors of process mining tools. However, organizations are not struggling with loading data into process mining tools. Their biggest hurdle is to extract event data from widely used information systems like SAP and Salesforce. One may need several hundred lines of SQL statements to extract data for a single process. If these systems directly produce XES, the threshold to use process mining is lowered considerably. Second, we need to create exchange formats closer to the actual data in systems. We are currently focusing on object-centric process mining and have developed an initial version of the Object-Centric Event Log (OCEL) standard. In OCEL we are not limited to a single case notion, and events can refer to multiple objects of different types. OCEL sits between XES and relational tables in today’s information systems. As a result, one can discover models that are much closer to the actual processes. Most processes cannot be seen in isolation and share both events and objects. I think that this will trigger many innovations and a new breed of process mining techniques.
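The difference between a single case notion and an object-centric log can be sketched as follows. This is a simplified in-memory illustration, not the OCEL serialization itself: the event layout, object types, and identifiers are all invented. Each event refers to several objects of different types, and picking one object type as the case notion flattens the log into classic traces.

```python
from collections import defaultdict

# Hypothetical object-centric events: each refers to objects of several types.
events = [
    {"activity": "place order", "objects": {"order": ["o1"], "item": ["i1", "i2"]}},
    {"activity": "pick item", "objects": {"item": ["i1"]}},
    {"activity": "pick item", "objects": {"item": ["i2"]}},
    {"activity": "ship order", "objects": {"order": ["o1"], "item": ["i1", "i2"]}},
]


def flatten(events, object_type):
    """Project the events onto one object type, yielding one trace per object."""
    traces = defaultdict(list)
    for event in events:
        for obj in event["objects"].get(object_type, []):
            traces[obj].append(event["activity"])
    return dict(traces)


by_order = flatten(events, "order")
by_item = flatten(events, "item")
print(by_order)
print(by_item)
```

The order view loses the picking steps, while the item view repeats the shared order events in every item trace, which shows why a single case notion gives only a partial picture of the process.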

BvD: Additionally, I believe that it would be beneficial for the community if tools are developed to directly interact with XES data. Many of us focus on advanced algorithms that require data to be in a specific form ready for process discovery or conformance checking, but we sometimes neglect the pre-processing steps needed to get from XES data to this input.

With students now being trained in the use of Python and R for data processing, it is only logical to develop interactive or automated filtering and preprocessing techniques for XES data in Python and R. In ProM, the dotted chart and many XES filtering plugins provide the ability for users to pre-process, but these tools lack the ability to store the steps for reuse. I think XES could benefit from an extension that stores filters on top of the data.
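One way to make pre-processing steps reusable is to describe them as data rather than as one-off interactions. The sketch below is only an illustration of that idea and not an existing XES extension or ProM feature: the filter names, parameters, and JSON layout are all hypothetical.

```python
import json


def keep_activities(traces, names):
    """Drop every event whose activity name is not in the given list."""
    allowed = set(names)
    return [[activity for activity in trace if activity in allowed] for trace in traces]


def min_length(traces, n):
    """Keep only traces with at least n events."""
    return [trace for trace in traces if len(trace) >= n]


# Registry that maps a stored filter name to its implementation.
FILTERS = {"keep_activities": keep_activities, "min_length": min_length}


def apply_pipeline(traces, steps):
    """Apply the stored filter steps in order."""
    for step in steps:
        traces = FILTERS[step["filter"]](traces, **step["params"])
    return traces


traces = [
    ["register", "check", "decide", "pay"],
    ["register", "check"],
    ["register", "decide"],
]

steps = [
    {"filter": "keep_activities", "params": {"names": ["register", "decide", "pay"]}},
    {"filter": "min_length", "params": {"n": 2}},
]

# The steps survive a round trip through JSON, so they can be kept with the data.
stored = json.dumps(steps)
replayed = apply_pipeline(traces, json.loads(stored))
print(replayed)
```

Because the steps are plain data, they could be stored alongside a log and replayed on a new extract of the same process.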

JB: I can only support Wil’s and Boudewijn’s call for more tools that support the data pre-processing phase. Data processing still claims a large part of the effort of an applied process mining project. Spending 80% of the time on data processing is, unfortunately, a realistic figure, as I experience daily at APG. If the data is ready, we can almost always swiftly provide any insight that is required. However, if the data is not in the right format, we cannot do much, or we need to spend a lot of time to get it right. Even commercial tools do not always provide the right support in this phase, especially when non-standard systems or setups are involved.

- This article has been updated on December 22 2020, 10:18.
